Tools—are they all the same?

UX Research tooling evaluation for Wine-Searcher.

While senior designers often pride themselves on being tool-agnostic, it is still important to understand the context, budgets and people surrounding these tools.

Covid has not just reset our ways of working but also influenced the budget and ROI conversations in many businesses. There are new tools with different pricing models that allow a relatively tight budget to be spent much more wisely.

Wine-Searcher is a web search engine enabling users to locate the price and availability of a given wine, whiskey, spirit or beer globally, and be directed to a business selling the alcoholic beverage.

In my role as contract design lead at Wine-Searcher, I did a deep dive to understand what the business needed in order to expand and embed its existing UX Research practices.

Get the right tools

I researched the history of UXR at Wine-Searcher, including the tooling and its use in UXR ops. Following past changes in the teams, UXR had been neglected and the team was left relying on stakeholder approvals to make design decisions.

There was awareness in the ELT that this had negatively impacted product design decisions. The external consultancy hired previously had provided insights but could not deliver the change in practice the team needed to continue its research efforts in BAU.

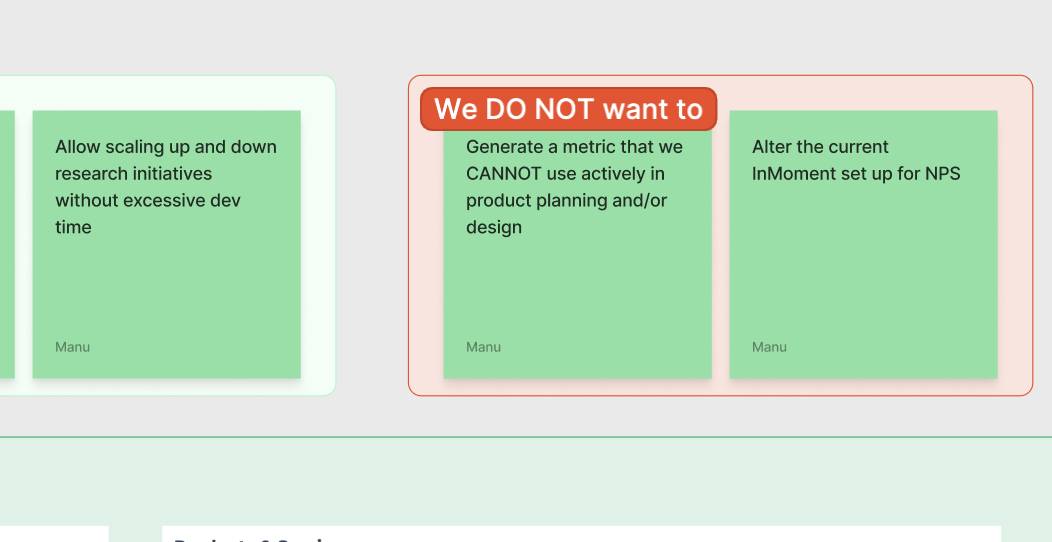

It was important for the ELT to understand the impact the right tools can have on a team’s work. We wanted tools that suited the cadence, budget and fidelity of the team. Equally important were dashboards that are shareable and help generate actionable insights quickly.

I documented and shared the purpose of quantitative and qualitative tools and how they help us scale research ops up and down when needed. This exercise helped address the concerns some stakeholders had, and I was given sign-off.

Making it happen

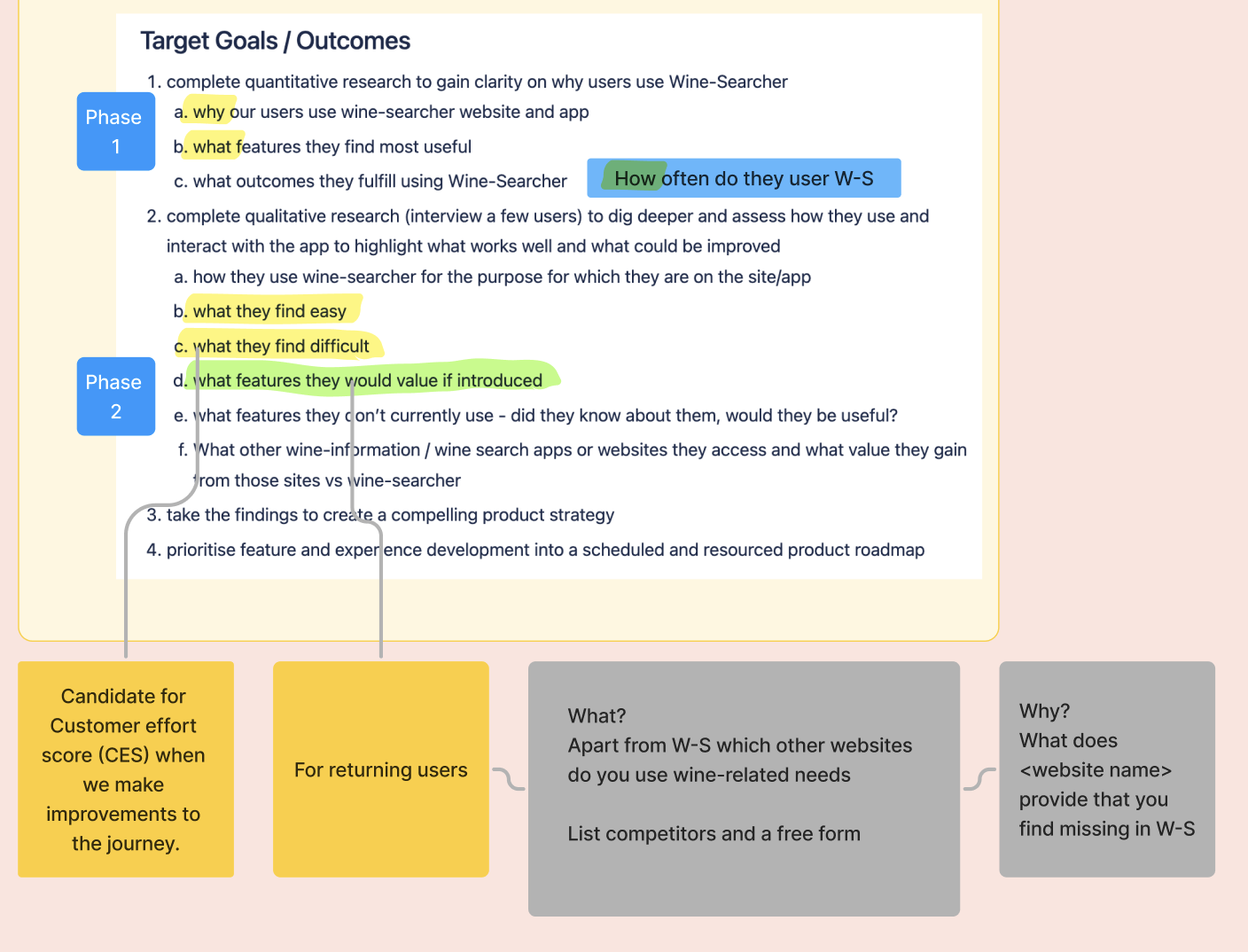

I worked closely with the Delivery Manager to break down the ask into several phases.

I ran feedback sessions at the end of every phase to ensure stakeholders' expectations stayed aligned.

I engaged with the product leads of relevant domains to help them identify a metric they could track in their reporting (for example, Customer Effort Score for a newly refactored, self-service Store Manager).

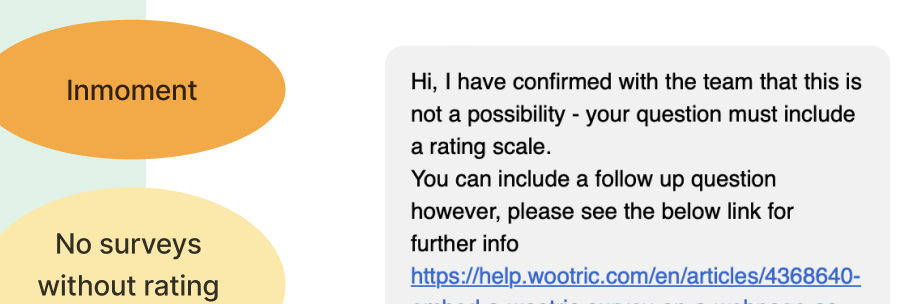

The iOS and Android apps had an established NPS metric that the board was used to, so we chose not to migrate them to the new tool.

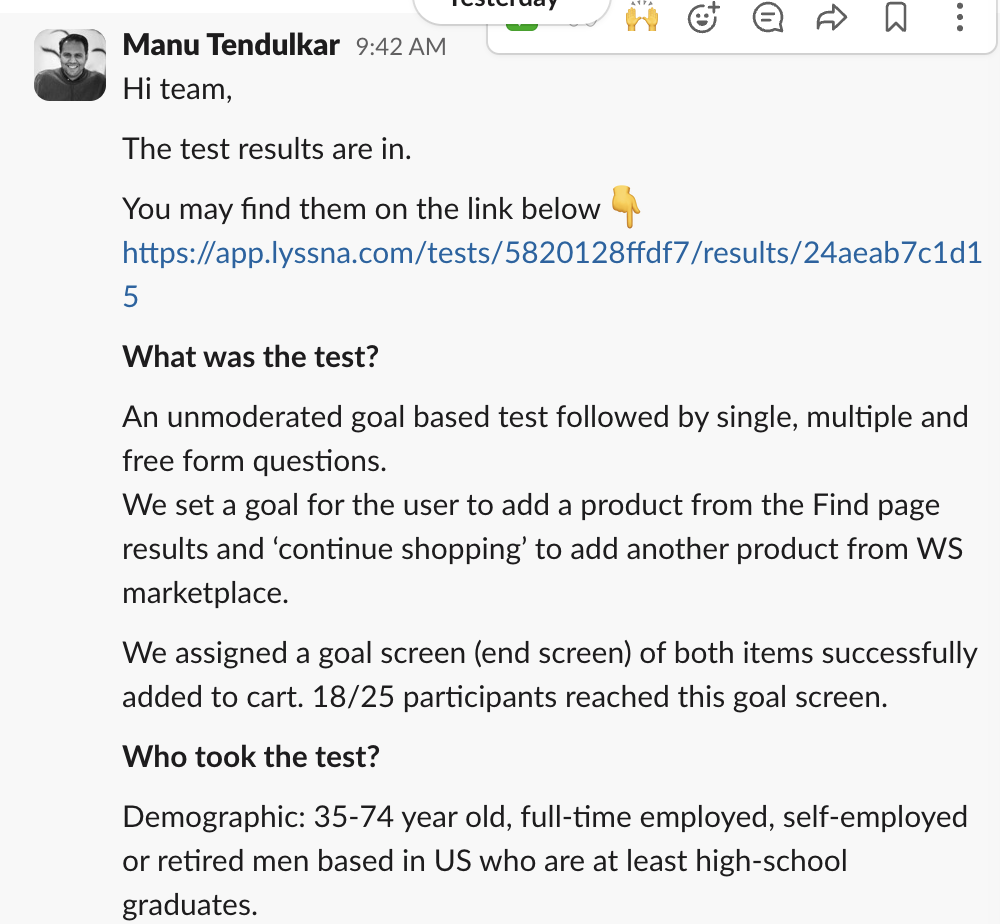

The team used these tools to generate rapid, actionable insights for many projects—including abandonment, purpose of session and marketplace testing.

The ELT, too, gained a deeper understanding of user behaviour and began to shed the ‘I know the user’ attitude over time.